Your Problem-Solving Companion (ItsAweso.me Series)

This guide serves as your comprehensive study companion for mastering data structures and algorithms. Whether you are preparing for technical interviews or building stronger problem-solving skills, this series establishes the foundation necessary for success.

How to Use This Guide:

- After reading: Review the concepts and examples

- Before practice: Refresh your understanding of key topics

- During interviews: Use as a quick reference for important concepts

- For deeper learning: Explore the additional examples and explanations

Remember: Real learning occurs through active practice and problem-solving. Use this guide to support your hands-on coding practice.

Table of Contents

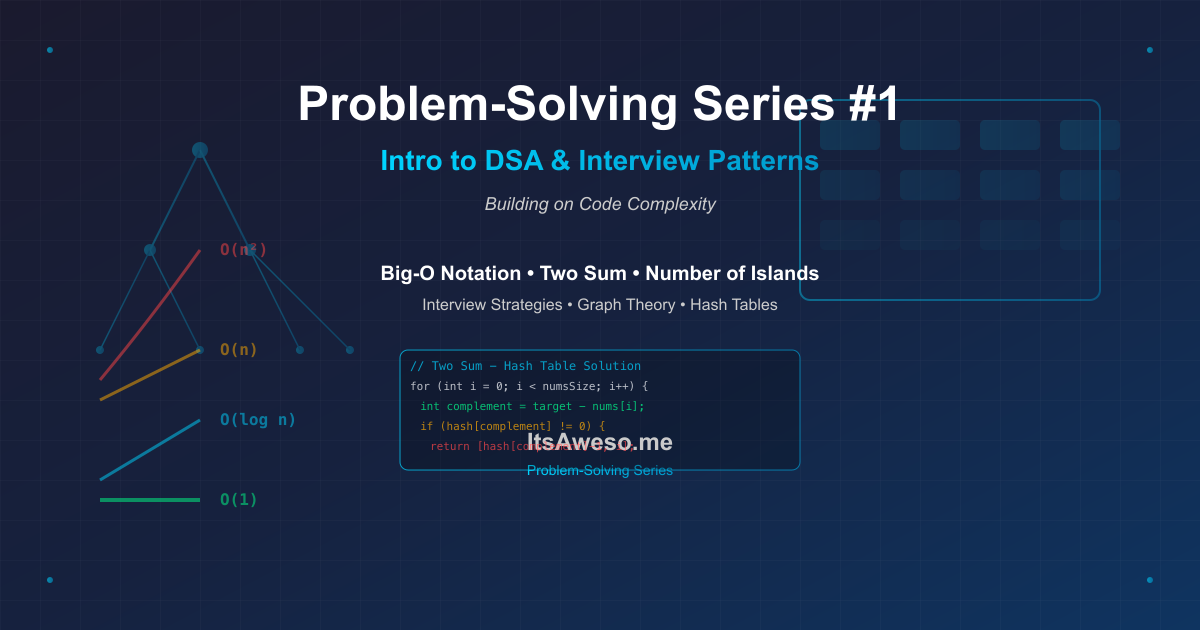

- Series Context: Building on Code Complexity

- Asymptotic Analysis Foundation

- Interview Expectations and Problem Categories

- Hands-on Programming Session

- Problem 1: Two Sum — Insight, Approach, Complexity

- Problem 2: Number of Islands — Graph Thinking and Implementation

- Problem-Solving Methodology Demonstrated

- Language Choice in Competitive Programming

- Complete Source Code Implementations

- Practice Recommendations

- Session Recap: Your Action Plan

TL;DR

- Big-O notation determines interview success: understanding algorithmic complexity separates qualified candidates from those who fail

- 30-minute interview strategy: Read problem (5 min) → Plan approach (5 min) → Implement solution (10 min) → Test and debug (5 min) → Optimize and explain (5 min)

- Six-step problem-solving methodology: Read thoroughly, ask clarifying questions, start with simple solutions, optimize strategically, test comprehensively, communicate professionally

- Two Sum key insight: Use hash tables for O(1) lookups, trading space for time to achieve O(n) complexity instead of O(n²) brute force

- Number of Islands key insight: Recognize as connected components problem, use DFS/BFS to explore and mark islands, achieving O(m×n) time complexity

- 30-day structured practice roadmap: Week 1 (fundamentals), Week 2 (patterns), Week 3 (speed and accuracy), Week 4 (mock interviews)

- Success metrics: Solve Easy problems in 15 minutes, Medium in 25 minutes, achieve 70%+ first-attempt success rate on Easy problems

Series Context: Building on Code Complexity

This post builds directly on the earlier analysis of Big-O notation and code complexity (see Code Complexity: A Dive into Various Aspects on ItsAweso.me). While this content stands alone, reviewing growth patterns will enhance your understanding.

Teaching Flow: From Foundation to Implementation

Topic 1: Asymptotic Analysis Foundation

The Problem: In technical interviews, candidates often struggle when asked about time complexity. While their code may function correctly, they cannot explain why it is efficient or how it scales with input size. This gap in understanding separates successful candidates from those who fail.

The Solution: Understanding Big O notation provides the foundation for algorithmic thinking and efficient problem-solving.

The "Why" Before the "What"

Common Interview Scenario:

- Candidate: "My solution works!"

- Interviewer: "Excellent! But what if the input size grows from 1,000 to 1,000,000?"

- Candidate: "It should still work..."

- Interviewer: "Let's move on..."

The Reality: Interviewers evaluate not just working code, but understanding of scalability and algorithmic efficiency.

Quantitative vs Qualitative: The "Speed" Analogy

Think of it like car shopping:

| Quantitative (Exact Numbers) | Qualitative (Big O Style) |

|---|---|

| "0-60 mph in 3.2 seconds" | "This car is fast" |

| "Top speed 180 mph" | "This car scales well" |

| "Fuel efficiency 25 mpg" | "This car is efficient" |

Big O is like saying: "This algorithm is fast" rather than "This algorithm takes exactly 2.5 seconds"

The Richter Scale Moment: Understanding Logarithms

Understanding Logarithms: Consider the Richter scale from seismology:

Magnitude 4.0 → 5.0 = 10x stronger

Magnitude 4.0 → 6.0 = 100x stronger

Magnitude 4.0 → 7.0 = 1000x stronger

Now apply this to algorithms:

| Input Size | O(log n) Steps | O(n) Steps |

|---|---|---|

| 10 | 1 | 10 |

| 100 | 2 | 100 |

| 1,000 | 3 | 1,000 |

| 10,000 | 4 | 10,000 |

Key Insight: Each step in O(log n) reduces the problem size by approximately 90%.

Big O Notation: Performance Analysis

Visual Growth Patterns:

Input Size: 10 100 1K 10K 100K

O(1): 1 1 1 1 1 ← Instant!

O(log n): 1 2 3 4 5 ← Super fast!

O(n): 10 100 1K 10K 100K ← Linear

O(n²): 100 10K 1M 100M 10B ← Disaster!

Real-World Impact:

- O(n²) on 100K elements: Program performance degrades significantly

- O(n) on 100K elements: Program performs acceptably

- O(log n) on 100K elements: Program executes efficiently

Why This Matters in Interviews

Interview Expectations:

- Easy Problems: Interviewer expects

O(n)or better complexity - Medium Problems: Interviewer expects

O(n log n)or better complexity - Hard Problems: Interviewer expects optimal complexity

Competitive Advantage: Understanding Big O notation enables you to:

- Choose appropriate algorithms before implementation

- Explain solution efficiency with confidence

- Optimize when asked for improvements

- Avoid solutions that will timeout on large inputs

Reference: The Big O Cheat Sheet provides comprehensive complexity analysis for common algorithms and data structures.

Topic 2: Interview Expectations and Problem Categories

The Interview Reality: Technical interviews typically involve solving algorithmic problems within a 30-minute timeframe. Success depends on both technical ability and effective communication.

Key Factors in Technical Interviews:

- Most candidates fail not due to inability to code, but lack of understanding of interview expectations

- Time management is critical - every minute counts

- Communication skills are as important as coding ability - candidates must articulate their thought process

The Time Pressure Reality

The 30-Minute Countdown:

Minutes 0-5: Read & Understand Problem

Minutes 5-10: Ask Questions & Plan Approach

Minutes 10-20: Implement Solution

Minutes 20-25: Test & Debug

Minutes 25-30: Optimize & Explain

Critical Point: Spending excessive time on problem understanding reduces available time for implementation and testing.

Problem Difficulty Levels

| Level | Time Limit | What Interviewer Expects | Your Goal |

|---|---|---|---|

| Easy | 15-20 min | Working solution, clean code | Get it right, get it fast |

| Medium | 20-30 min | Optimized solution, good complexity | Show you can think algorithmically |

| Hard | 30-45 min | Optimal solution, edge cases handled | Demonstrate advanced thinking |

Focus Area: Most interviews concentrate on Easy-Medium problems. Master these difficulty levels first.

Problem Categories: Essential Techniques

Core algorithmic patterns and data structures for technical interviews:

Fundamental Techniques (Essential)

- Arrays & Strings: Core data structures - appear in 40% of problems

- Hash Tables: Versatile tool for frequency counting, duplicate detection, caching

- Two Pointers: Efficient technique for array and string processing

Intermediate Techniques (Important)

- Stacks & Queues: Bracket matching, BFS/DFS implementations

- Trees & Graphs: Connected components, path finding, traversal algorithms

- Sliding Window: Subarray and substring optimization

Advanced Techniques (Specialized)

- Dynamic Programming: Optimization technique for overlapping subproblems

- Binary Search: Logarithmic time complexity for search operations

- Advanced Data Structures: Heaps, tries, segment trees

The Interview Strategy: Systematic Approach

Six-Step Problem-Solving Process:

Step 1: Thorough Problem Analysis

- Read the problem multiple times - avoid skimming

- Identify key constraints - input size, time limits, edge cases

- Create examples - visualize the problem with concrete data

Step 2: Clarify Requirements

- "What if the input is empty?"

- "Are there any constraints on the input size?"

- "Should I handle edge cases like duplicates?"

- Professional Practice: Asking clarifying questions demonstrates systematic thinking

Step 3: Implement Initial Solution

- Start with brute force approach - prioritize working code

- Avoid premature optimization - working code is better than perfect code

- Monitor time allocation - if brute force exceeds 10 minutes, reassess approach

Step 4: Strategic Optimization

- Identify performance bottlenecks - analyze what makes the solution slow

- Apply appropriate techniques - hash tables, two pointers, etc.

- Document optimization rationale - "This reduces complexity from O(n²) to O(n)"

Step 5: Comprehensive Testing

- Test edge cases first - empty input, single element, large input

- Trace through code execution - verify logic step by step

- Check for boundary errors - off-by-one errors are common pitfalls

Step 6: Professional Communication

- Articulate thought process - help interviewer follow your reasoning

- Justify design decisions - "I chose a hash table because..."

- Acknowledge trade-offs - "This uses more space but improves time complexity"

Common Interview Pitfalls

| Pitfall | Impact | Prevention |

|---|---|---|

| Rushing to code | Solving incorrect problem | Read multiple times, ask clarifying questions |

| Silent coding | Interviewer cannot follow logic | Articulate thought process, explain decisions |

| Ignoring edge cases | Demonstrates incomplete analysis | Test empty, single element, and large inputs |

| Not optimizing | Shows limited understanding of efficiency | Analyze complexity, suggest improvements |

| Giving up too early | Indicates lack of persistence | Start with brute force, then optimize |

Remember: The interviewer wants you to succeed. They are testing your problem-solving process, not just your coding ability.

Topic 3: Hands-on Programming Session

Live Problem Solving: Observe the systematic approach to coding problems. The focus is on the thinking process that distinguishes successful candidates.

Learning Objectives:

- Problem analysis techniques - systematic breakdown of complex problems

- Algorithm selection - choosing appropriate data structures and approaches

- Implementation strategy - translating algorithmic concepts into working code

- Optimization techniques - improving solution efficiency

Problems Covered:

- Two Sum - Introduction to hash table optimization

- Number of Islands - Graph theory and connected components

Learning Approach: Analyze each solution step-by-step. Consider alternative approaches and optimizations.

Problem 1: Two Sum — Insight, Approach, Complexity

The Problem: Find two numbers that add up to a target. While conceptually simple, this problem demonstrates the importance of considering algorithmic efficiency before implementation.

Problem Statement:

Given an array of integers nums and an integer target, return indices of the two numbers such that they add up to target.

Example:

Input: nums = [2, 7, 11, 15], target = 9

Output: [0, 1] // Because nums[0] + nums[1] = 2 + 7 = 9

Problem Analysis and Solution Development

Step 1: Initial Brute Force Approach

// Brute force approach - inefficient

for (int i = 0; i < n; i++) {

for (int j = i + 1; j < n; j++) {

if (nums[i] + nums[j] == target) {

return [i, j];

}

}

}

Analysis: This approach has O(n²) time complexity. For large inputs (100,000 elements), performance degrades significantly.

Step 2: Key Insight - Complement Search

Optimization Strategy: Instead of searching for pairs, search for complements.

- For each number

nums[i], calculate the required complement:target - nums[i] - If the complement has been seen before, we have found our pair

Step 3: Hash Table Implementation

// Optimized solution using hash table

int complement = target - nums[i];

if (hash[complement] != 0) {

// Found the pair!

}

hash[nums[i]] = i + 1; // Store for future lookups

Efficiency: Hash table provides O(1) average-case lookup time, enabling linear time complexity.

Implementation Strategy

Data Structure Selection:

- Hash Table: Optimal for O(1) lookups

- Array-based Hash: Simple and efficient for integer keys

- Zero Initialization: Using

calloc()to avoid initialization issues

Core Algorithm Implementation:

// Iterate through each number in the array

for (int i = 0; i < numsSize; i++) {

// Calculate the required complement

int complement = target - nums[i];

// Check if complement has been seen before

if (hash[complement] != 0) {

// Found the pair - return indices

result[0] = hash[complement] - 1; // Convert back to 0-based index

result[1] = i;

return result;

}

// Store current number for future lookups

hash[nums[i]] = i + 1; // +1 to distinguish from uninitialized 0

}

Implementation Details:

- Index Management: Store

i + 1to distinguish from uninitialized0 - Early Termination: Exit immediately upon finding the solution

- Memory Management: Proper

malloc()/free()to prevent memory leaks

Key Teaching Points

1. Memory Management

int *hash = calloc(1000, sizeof(int)); // Zero-initialized

// ... use hash table ...

free(hash); // Always free allocated memory

2. Edge Case Handling

- No solution exists: Return

[-1, -1] - Duplicate numbers: Handle gracefully

- Empty array: Check array size first

3. Index Management

- 0-based vs 1-based: Maintain consistency and document your approach

- Off-by-one errors: Common source of bugs in array processing

4. Optimization Strategy

- Start with brute force: Prioritize working solution

- Identify bottlenecks: Nested loops create quadratic complexity

- Apply appropriate tools: Hash table for O(1) lookups

- Document rationale: "I chose hash table because..."

Key Learning: This problem demonstrates the fundamental trade-off of space for time. Hash tables enable efficient lookups at the cost of additional memory.

5. Complexity Analysis:

- Time Complexity:

O(n)- Single Pass: We iterate through the array exactly once

- Hash Table Operations:

O(1)average case for insert and lookup - Total Operations: n iterations ×

O(1)hash operations =O(n) - Why O(n): Each element is processed once, hash table provides constant-time access

- Space Complexity:

O(n)- Hash Table Storage: Stores at most n elements (one for each array element)

- Worst Case: When no solution exists, we store all n elements

- Best Case: When solution is found early, we store fewer elements

- Memory Growth: Scales linearly with input size

- Comparison with Brute Force:

- Brute Force Time:

O(n²)- nested loops checking every pair - Brute Force Space:

O(1)- only uses constant extra space - Trade-off: Hash table solution trades space for time efficiency

- When to Use: Hash table preferred for large arrays, brute force for very small arrays

- Brute Force Time:

- Hash Table Considerations:

- Collision Handling: Rare in practice for this problem due to integer constraints

- Load Factor: Hash table performance remains

O(1)with good hash function - Memory Overhead: Additional space for hash table structure and collision resolution

Problem 2: Number of Islands — Graph Thinking and Implementation

The Problem: Given a 2D grid representing land and water, count the number of islands. While appearing as a geography problem, this is fundamentally a graph theory problem involving connected components.

Problem Statement: Given an m×n 2D binary grid representing land ('1') and water ('0'), count the number of islands. An island is surrounded by water and formed by connecting adjacent lands horizontally or vertically.

Example:

Input: grid = [

["1","1","0","0","0"],

["1","1","0","0","0"],

["0","0","1","0","0"],

["0","0","0","1","1"]

]

Output: 3 // Three separate islands

Key Insight: Graph Theory Application

Problem Analysis:

- Each island represents a connected component in a graph

- Adjacent cells function as connected nodes

- Objective: Find all connected components

Visual Representation:

Grid: Graph View:

1 1 0 0 0 A---B

1 1 0 0 0 | |

0 0 1 0 0 C

0 0 0 1 1 D---E

Islands: A-B (size 2), C (size 1), D-E (size 2)

Total: 3 islands

The Algorithm Strategy

Step 1: Incorrect Approach

// Incorrect - counts every land cell instead of islands

for (int i = 0; i < rows; i++) {

for (int j = 0; j < cols; j++) {

if (grid[i][j] == '1') {

count++; // This counts every land cell, not islands

}

}

}

Step 2: Island Sinking Strategy

Key Insight: When an island is found, mark all connected land cells as visited to prevent double-counting.

// Correct approach

if (grid[i][j] == '1') {

islands++;

dfs(grid, i, j); // Mark entire island as visited

}

Step 3: Depth-First Search Implementation

void dfs(vector<vector<char>>& grid, int i, int j) {

// Base cases: out of bounds or water

if (i < 0 || i >= rows || j < 0 || j >= cols || grid[i][j] == '0')

return;

grid[i][j] = '0'; // Mark as visited

// Explore all 4 directions

dfs(grid, i-1, j); // Up

dfs(grid, i+1, j); // Down

dfs(grid, i, j-1); // Left

dfs(grid, i, j+1); // Right

}

Implementation Strategy

Data Structure Choices:

- 2D Vector: Natural grid representation

- Direction Arrays: Clean way to handle 4-directional movement

- In-place Modification: Use the grid itself as visited marker

The Complete Algorithm:

int numIslands(vector<vector<char>>& grid) {

if (grid.empty() || grid[0].empty()) return 0;

int rows = grid.size();

int cols = grid[0].size();

int islands = 0;

for (int i = 0; i < rows; i++) {

for (int j = 0; j < cols; j++) {

if (grid[i][j] == '1') {

islands++; // Found a new island

dfs(grid, i, j); // Sink it completely

}

}

}

return islands;

}

Key Implementation Tricks:

- Boundary Checking: Always check bounds before accessing grid

- In-place Visited Marking: No need for separate visited array

- Early Return: Exit DFS as soon as we hit water or boundaries

Key Teaching Points

1. Graph Theory in Disguise

- Connected Components: Each island is a connected component

- Adjacency: Cells are adjacent if they share an edge (not corner)

- Traversal: DFS/BFS to explore connected components

2. The "Sink" Strategy

- Why sink?: Prevents double-counting the same island

- When to sink?: As soon as you start exploring an island

- What to sink?: The entire connected component

3. Recursion vs Iteration

- DFS: Naturally recursive, elegant code

- BFS: Iterative with queue, better space complexity

- Choice: DFS for simplicity, BFS for space optimization

4. Boundary Conditions

- Grid bounds: Always check

i < 0 || i >= rows - Water cells: Stop when you hit '0'

- Edge cases: Empty grid, single cell, all water

5. Space Optimization

- In-place marking: Use grid itself as visited array

- No extra space: Except recursion stack

- Alternative: Use separate visited array if you cannot modify input

BFS Alternative (For Space Optimization)

void bfs(vector<vector<char>>& grid, int i, int j) {

queue<pair<int, int>> q;

q.push({i, j});

grid[i][j] = '0';

int dirs[4][2] = {{-1,0}, {1,0}, {0,-1}, {0,1}};

while (!q.empty()) {

auto [r, c] = q.front();

q.pop();

for (auto [dr, dc] : dirs) {

int nr = r + dr, nc = c + dc;

if (nr >= 0 && nr < rows && nc >= 0 && nc < cols && grid[nr][nc] == '1') {

grid[nr][nc] = '0';

q.push({nr, nc});

}

}

}

}

BFS Advantage: Space complexity O(min(m,n)) instead of O(m×n)

Key Learning: This problem demonstrates how to recognize graph problems in disguise. Many grid problems are fundamentally graph traversal problems.

6. Complexity Analysis:

- Time Complexity: O(m × n)

- Explanation: Each cell in the grid is visited at most once

- DFS Contribution: Each land cell triggers a DFS that visits all connected land cells

- Total Visits: Even with multiple DFS calls, each cell is visited exactly once

- Why O(m × n): Grid has m rows and n columns, so total cells = m × n

- Space Complexity: O(m × n) in worst case

- Recursion Stack: In worst case, the entire grid could be one large island

- Maximum Depth: DFS could go as deep as m × n levels in recursion stack

- Example Worst Case: A grid filled entirely with '1's forming one large island

- Average Case: Much better, typically O(min(m, n)) for most realistic grids

- Optimization Note:

- BFS Alternative: Same time complexity but different space complexity pattern

- BFS Space: O(min(m, n)) - queue size is bounded by grid perimeter

- Choice: DFS is often preferred for simplicity, BFS for space optimization

Problem-Solving Methodology Demonstrated

- Systematic Approach:

- Read & Understand: Completely grasp the problem requirements

- Algorithm Selection: Choose appropriate data structures and algorithms

- Implementation: Write clean, working code

- Testing: Verify with examples and edge cases

- Code Organization:

- Driver Code: Set up test cases and main function

- Core Logic: Implement the algorithm cleanly

- Helper Functions: Break down complex operations

- Documentation: Clear comments explaining the approach

- Performance Considerations:

- Time Complexity: Always analyze and communicate

- Space Complexity: Consider memory usage

- Optimization: Start with working solution, then optimize

- Best Practices Emphasized:

- Confidence in Implementation: Get compilation working first

- Incremental Development: Build and test step by step

- Language Constructs: Choose appropriate loops, conditionals, and data structures

- Practice Philosophy: The 1000-hour rule for mastery through deliberate practice

Key Takeaway: Problem-solving is a skill developed through systematic practice. Focus on understanding the problem, choosing the right approach, and implementing it cleanly. The source code implementations below are for your reference and practice.

Language Choice in Competitive Programming

Language Selection Philosophy:

I used C and C++ in these examples as they are standard choices in competitive programming, but this is not a requirement. Many successful competitive programmers use Python, Java, JavaScript, or other languages.

Key Principles for Language Selection:

- Choose What You Are Comfortable With:

- Primary Factor: Pick a language you are familiar and comfortable with

- Learning Curve: Do not switch languages just for competitive programming if you are not proficient

- Confidence: You should be able to implement algorithms without struggling with syntax

- Language as a Tool:

- Problem-Solving First: The algorithm and logic matter more than the language

- Implementation Speed: Use what lets you code fastest and most accurately

- Debugging Ease: Choose what you can debug quickly under time pressure

- When Language Choice Matters:

- Built-in Data Structures: Some algorithms benefit from language-specific features

- BFS with Queue: C++ STL queue, Java Queue class, Python collections.deque

- Hash Tables: C++ unordered_map, Java HashMap, Python dict

- Sorting: Built-in sort functions vs implementing from scratch

- Performance Requirements: Some problems have tight time limits

- Library Availability: Certain algorithms have optimized implementations

- Built-in Data Structures: Some algorithms benefit from language-specific features

- Language Communities and Domains:

- Python: AI/ML heavy libraries, data science, rapid prototyping

- Java: Enterprise applications, Android development, strong typing

- C++: Systems programming, competitive programming, performance-critical code

- JavaScript: Web development, full-stack applications

- C: Embedded systems, low-level programming, understanding fundamentals

Bottom Line: Start with what you know best. Language proficiency comes from practice, and you can always learn new languages later. Focus on algorithmic thinking and problem-solving skills — these transfer across all programming languages.

Complete Source Code Implementations

Problem 1: Two Sum - C Implementation

/*

* Two Sum - C Implementation

* LeetCode #1

*

* Problem: Given an array of integers nums and an integer target,

* return indices of the two numbers such that they add up to target.

*

* Example:

* Input: nums = [2, 7, 11, 15], target = 9

* Output: [0, 1]

*

* Time Complexity: O(n²) for brute force, O(n) for hash table

* Space Complexity: O(1) for brute force, O(n) for hash table

*/

#include <stdio.h>

#include <stdlib.h>

int* twoSumHashTable(int *nums, int numsSize, int target)

{

int *hash = calloc(1000, sizeof(int));

int *result = malloc(2 * sizeof(int));

for (int i = 0; i < numsSize; i++)

{

int complement = target - nums[i];

if (hash[complement] != 0)

{

result[0] = hash[complement] - 1; // -1 for zero-based index

result[1] = i;

free(hash);

return result;

}

hash[nums[i]] = i + 1; // Store index + 1 to differentiate from zero

}

free(hash);

free(result);

result[0] = -1;

result[1] = -1;

return result;

}

int main()

{

int nums[] = {2, 7, 11, 15};

int target = 9;

int *result = twoSumHashTable(nums, sizeof(nums) / sizeof(nums[0]), target);

printf("Indices: [%d, %d]\n", result[0], result[1]);

return 0;

}

Problem 2: Number of Islands - C++ Implementation (Complete Version)

/*

* Number of Islands - LeetCode #200

*

* Problem Statement:

* Given an m x n 2D binary grid which represents a map of '1's (land) and '0's (water),

* return the number of islands. An island is surrounded by water and is formed by

* connecting adjacent lands horizontally or vertically.

*

* Example:

* Input: grid = [

* ["1","1","0","0","0"],

* ["1","1","0","0","0"],

* ["0","0","1","0","0"],

* ["0","0","0","1","1"]

* ]

* Output: 3

*

* Teaching Points:

* 1. Graph Traversal (DFS/BFS)

* 2. Connected Components

* 3. Grid-based problems

* 4. STL containers (queue, vector)

* 5. Direction arrays for 4-directional movement

*/

#include <iostream>

#include <vector>

#include <queue>

#include <string>

using namespace std;

void printGrid(const vector<vector<char>> &grid)

{

for (const auto &row : grid)

{

for (const auto &cell : row)

{

cout << cell << " ";

}

cout << endl;

}

}

void dfs(vector<vector<char>> &grid, int i, int j)

{

int rows = grid.size();

int cols = grid[0].size();

// Base case: out of bounds or reached water

if (i < 0 || i >= rows || j < 0 || j >= cols || grid[i][j] == '0')

return;

// visited

grid[i][j] = '0';

// cout << "Visiting: (" << i << ", " << j << ")" << endl;

// Explore all 4 directions (up, down, left, right)

dfs(grid, i - 1, j); // Up

dfs(grid, i + 1, j); // Down

dfs(grid, i, j - 1); // Left

dfs(grid, i, j + 1); // Right

}

int numIslands(vector<vector<char>> &grid)

{

if (grid.empty() || grid[0].empty()) return 0;

int rows = grid.size();

int cols = grid[0].size();

int islands = 0;

for (int i = 0; i < rows; i++)

{

for (int j = 0; j < cols; j++)

{

if (grid[i][j] == '1')

{

islands++;

dfs(grid, i, j);

}

}

}

return islands;

}

int main()

{

vector<vector<char>> grid = {

{'1', '1', '0', '0', '0'},

{'1', '1', '0', '0', '0'},

{'0', '0', '1', '0', '0'},

{'0', '0', '0', '1', '1'}};

cout << "Original Grid:" << endl;

printGrid(grid);

int result = numIslands(grid);

cout << "\nNumber of Islands: " << result << endl;

cout << "\nGrid after DFS (all islands sunk):" << endl;

printGrid(grid);

return 0;

}

Practice Recommendations

- Compile and Run: Try compiling and running these programs on your system

- Modify Test Cases: Change the input arrays/grids to test different scenarios

- Add Debugging: Add print statements to understand the execution flow

- Implement Brute Force: Try implementing the O(n²) brute force solution for Two Sum

- Try BFS: Implement Number of Islands using BFS instead of DFS

- Memory Management: Practice proper memory allocation/deallocation in C

- STL Practice: Experiment with different STL containers and algorithms in C++

Remember: The key to mastering these problems is consistent practice and understanding the underlying concepts rather than memorizing code!

Session Recap: Your Action Plan

What We Just Mastered

1. The Big O Superpower

- You now understand: Why O(n²) is inefficient and O(n) is optimal

- You can explain: How algorithms scale with input size

- You can choose: The right algorithm before writing a single line of code

2. The Interview Game Plan

- You know the rules: 30-minute countdown, communication matters

- You have a strategy: 6-step process that works every time

- You understand expectations: What interviewers really want to see

3. Two Classic Problems Solved

- Two Sum: Mastered the hash table technique

- Number of Islands: Conquered graph traversal

- You can implement: Both solutions from scratch

4. Professional Coding Standards

- Memory management: No more memory leaks

- Edge case handling: You think like a professional

- Code organization: Clean, readable, maintainable code

The 1000-Hour Rule: Your Path to Mastery

The Reality: Becoming a great problem solver takes time, but it is not about random practice.

The Science Behind It: The 1000-hour rule is based on research showing that achieving competence in a specific skill typically requires around 1000 hours of deliberate practice. This builds on Anders Ericsson's research on expertise and Malcolm Gladwell's popularization of the 10,000-hour rule for world-class mastery.

Your Structured Journey:

- Hours 0-100: Master the basics (Easy problems, Big O, common patterns)

- Hours 100-300: Build speed and accuracy (Medium problems, optimization)

- Hours 300-600: Advanced techniques (Hard problems, complex algorithms)

- Hours 600-1000: Interview mastery (mock interviews, system design)

Your Daily Commitment:

- Minimum: 30 minutes of focused practice

- Optimal: 1-2 hours of deliberate practice

- Maximum: 3-4 hours (avoid burnout)

Remember: Consistency beats intensity. 30 minutes daily > 4 hours once a week.

Reference: Ericsson, A., Krampe, R. T., & Tesch-Römer, C. (1993). The role of deliberate practice in the acquisition of expert performance. Psychological Review, 100(3), 363-406.

Your Learning Journey: Next Steps

Immediate Actions (This Week):

- Compile and Run: Execute the provided code examples on your system

- Modify Test Cases: Try different inputs to understand edge cases

- Implement Variations:

- Two Sum with brute force approach

- Number of Islands with BFS instead of DFS

- Language Practice: Implement the same problems in your preferred language

Short-term Goals (Next 2-4 Weeks):

- Daily Practice: Solve 1-2 problems daily on platforms like:

- LeetCode: Start with Easy problems, progress to Medium

- Pattern Recognition: Focus on common problem patterns:

- Two Pointers technique

- Sliding Window

- Binary Search variations

- Basic Graph algorithms (BFS/DFS)

- Time Management: Practice solving problems within time limits (20-30 minutes)

Medium-term Development (1-3 Months):

- Build Your Toolkit: Master these fundamental data structures:

- Arrays & Strings: Manipulation, searching, sorting

- Hash Tables: Frequency counting, duplicate detection

- Stacks & Queues: Bracket matching, BFS implementations

- Trees: Binary trees, BST operations, tree traversals

- Algorithm Mastery: Focus on these core algorithms:

- Sorting: Quick sort, merge sort, counting sort

- Searching: Binary search, linear search

- Graph Algorithms: BFS, DFS, shortest path basics

- Dynamic Programming: Start with simple problems like Fibonacci, climbing stairs

Long-term Excellence (3-6 Months):

- Advanced Topics: Progress to more complex algorithms:

- Advanced Graph Algorithms: Dijkstra's, topological sorting

- Dynamic Programming: Medium to hard problems

- Advanced Data Structures: Heaps, tries, segment trees

- Mock Interviews: Practice with realistic interview scenarios

- System Design: Begin learning system design concepts (for senior roles)

Study Resources & Platforms

Online Resources:

- Big O Cheat Sheet: https://www.bigocheatsheet.com/

- Visualgo: Algorithm visualizations

Mindset & Approach

The 1000-Hour Rule:

- Deliberate Practice: Focused, systematic practice beats random coding

- Consistency: 1-2 hours daily is better than 10 hours once a week

- Progressive Difficulty: Always push yourself slightly beyond your comfort zone

Problem-Solving Framework:

- Understand: Read the problem multiple times, identify key constraints

- Plan: Write down your approach before coding

- Implement: Write clean, working code

- Test: Verify with examples and edge cases

- Optimize: Look for better time/space complexity

- Review: Learn from each problem - what worked, what did not

Common Pitfalls to Avoid:

- Rushing to Code: Always understand the problem first

- Ignoring Edge Cases: Test with empty arrays, single elements, large inputs

- Not Communicating: Practice explaining your approach out loud

- Memorizing Solutions: Focus on understanding patterns, not specific code

- Skipping Easy Problems: Build confidence and speed with simpler problems

Success Metrics

Track Your Progress:

- Problems Solved: Aim for 100+ problems in 3 months

- Success Rate: Target 70%+ on first attempt for Easy, 50%+ for Medium

- Speed: Work towards solving Easy problems in 15 minutes, Medium in 25 minutes

- Pattern Recognition: Be able to identify problem types quickly

Interview Readiness Signs:

- Can solve Medium problems consistently within 30 minutes

- Comfortable explaining solutions clearly

- Can handle follow-up questions about optimization

- Confident with at least one programming language

- Understand time/space complexity of your solutions

Your Success Story Starts Today

The Truth: Right now, somewhere in the world, someone is solving the same problems you are learning. The difference between you and them? You have a plan, and they do not.

Your Competitive Advantage:

- You understand Big O notation - most candidates do not

- You have a systematic approach - most candidates wing it

- You know what interviewers expect - most candidates guess

- You have a 30-day transformation plan - most candidates have no plan

The Mindset Shift That Changes Everything

From: "I hope I can solve this problem" To: "I know exactly how to approach this problem"

From: "I will figure it out during the interview" To: "I have practiced this pattern 100 times"

From: "Maybe I will get lucky" To: "I have earned this through deliberate practice"

Your Daily Success Ritual

Every Morning (5 minutes):

- Review one algorithm concept

- Plan your practice session

- Set a specific goal for today

Every Evening (5 minutes):

- Reflect on what you learned

- Celebrate your progress (no matter how small)

- Plan tomorrow's practice

Every Week (30 minutes):

- Review your progress

- Adjust your strategy if needed

- Plan next week's focus areas

The 30-Day Challenge

I challenge you to commit to 30 days of focused practice:

Week 1: Build the foundation

Week 2: Master the patterns

Week 3: Build speed and accuracy

Week 4: Simulate real interviews

At the end of 30 days, you will be:

- Confident in your problem-solving abilities

- Fast at recognizing and implementing solutions

- Professional in your coding standards

- Ready for technical interviews

Your Legacy

Remember: You are not just learning to code. You are building a skill that will:

- Open doors to amazing career opportunities

- Solve real-world problems that impact millions

- Make you a better thinker in all areas of life

- Give you confidence to tackle any challenge

The skills you develop here will serve you for decades, not just for your next interview.

Your Action Plan (Right Now)

In the next 5 minutes:

- Bookmark this guide for easy reference

- Set a reminder for tomorrow's practice session

- Choose your first problem to solve

- Commit to the 30-day challenge

In the next 24 hours:

- Solve your first problem using the techniques you learned

- Implement Two Sum in your preferred language

- Explain Big O notation to someone (even if it is just yourself)

- Plan your Week 1 practice schedule

The Final Truth

Every expert was once exactly where you are right now.

- Dennis Ritchie started with simple programs

- Linus Torvalds began with basic algorithms

- Grace Hopper learned one concept at a time

The difference between them and everyone else?They started. They persisted. They practiced deliberately.

You have everything you need to succeed. The only question is:Will you start today?

Ready to Begin Your Journey?

"The best time to plant a tree was 20 years ago. The second best time is now." - Chinese Proverb

"Knowledge is like a garden; if it is not cultivated, it cannot be harvested." - Indian Proverb

Your tree is waiting to be planted. Start digging.

Your Next Move

Ready to put this into action? Here is your challenge:

Pick one problem today, timebox 25 minutes, review.

Whether it is implementing Two Sum in your favorite language or tackling Number of Islands with a fresh approach, the key is to start. Every expert was once exactly where you are right now.

Happy Coding, Future Expert!

"The only way to learn a new programming language is by writing programs in it." - Dennis Ritchie