Your Problem-Solving Companion (ItsAweso.me Series)

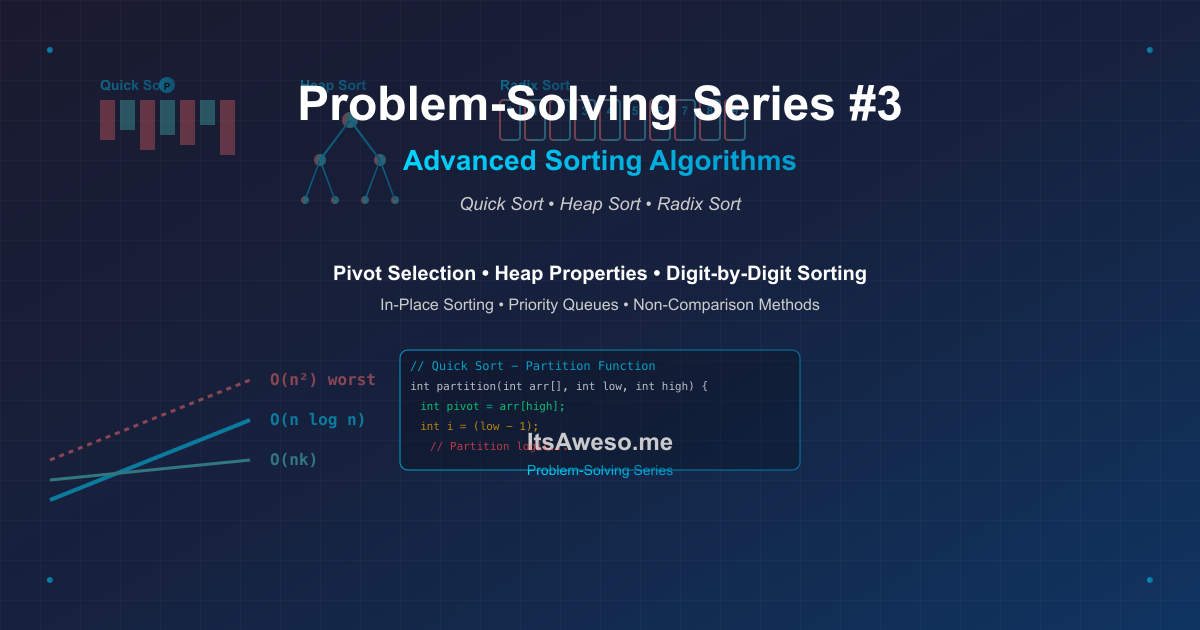

This guide continues your comprehensive study companion for mastering data structures and algorithms. Building upon the foundational sorting concepts from your previous sessions, you'll now explore three powerful advanced sorting algorithms that represent different paradigms in algorithm design.

How to Use This Guide:

- After reading: Review the algorithms and trace through the code examples

- Before practice: Refresh your understanding of sorting concepts and complexity analysis

- During interviews: Use as a quick reference for sorting algorithm characteristics

- For deeper learning: Implement each algorithm and experiment with different datasets

Remember: Real learning occurs through active practice and implementation. Use this guide to support your hands-on coding practice with advanced sorting algorithms.

Table of Contents

- Series Context: Building on Sorting Foundations

- Introduction: The Next Level of Sorting

- Quick Sort (1960): Tony Hoare's In-Place Champion

- Heap Sort (1964): J.W.J. Williams' Priority Queue Approach

- Radix Sort (1887 concept, 1960s algorithm): Herman Hollerith's Digit-by-Digit Method

- Algorithm Comparison and Selection Guide

- Real-World Applications

- Advanced Optimizations

- Practice Problems and Implementation Challenges

- Key Takeaways

- Next Steps in Our DSA Journey

- Visual Learning Resources

- Practice Problems

TL;DR

- Quick Sort (1960): The in-place champion with average O(n log n) performance, uses pivot selection and partitioning

- Heap Sort (1964): The priority queue approach with guaranteed O(n log n), uses binary heap data structure

- Radix Sort (1887 concept, 1960s algorithm): The non-comparison digit-by-digit method with O(nk) complexity

- Key insight: Each algorithm offers unique advantages - Quick Sort for average performance, Heap Sort for guaranteed complexity, Radix Sort for fixed-width keys

- Production systems: Modern languages use hybrid algorithms that combine the best of these approaches

- Learning progression: Master these advanced algorithms to understand different algorithmic paradigms

- Practice strategy: Implement each algorithm, understand their trade-offs, and know when to use each approach

Series Context: Building on Sorting Foundations

This post builds directly on the foundational sorting concepts you learned in your previous Problem-Solving Series sessions (see Problem-Solving Series #2: The Evolution of Sorting on ItsAweso.me). While this content stands alone, reviewing the basic sorting algorithms will enhance your understanding of these advanced techniques.

Teaching Flow: From Foundation to Advanced Implementation

Topic 1: Advanced Sorting Algorithm Analysis

The Problem: Many developers understand basic sorting but struggle with advanced algorithms and their specific use cases. This leads to suboptimal choices in production systems and poor performance on specific datasets.

The Solution: Understanding advanced sorting algorithms provides insight into different algorithmic paradigms and helps you choose the right tool for each situation.

The "Why" Before the "What"

Common Development Scenario:

- Developer: "I'll just use the built-in sort function"

- Senior Engineer: "What if you're sorting 10 million records with specific patterns?"

- Developer: "It should still work..."

- Senior Engineer: "Let's analyze the performance characteristics and choose the optimal algorithm..."

The Reality: Understanding advanced sorting algorithms enables you to make informed decisions about performance, stability, and memory usage in production systems.

Advanced Sorting Algorithms: Quick Sort, Heap Sort, and Radix Sort

Introduction: The Next Level of Sorting

In our previous exploration, we covered the evolution from simple insertion sort to sophisticated hybrid algorithms like Tim Sort. Now we dive deeper into three powerful sorting algorithms that represent different paradigms:

- Quick Sort (1960): The in-place champion with average O(n log n) performance

- Heap Sort (1964): The priority queue approach with guaranteed O(n log n)

- Radix Sort (1887 concept, 1960s algorithm): The non-comparison digit-by-digit method

Each algorithm offers unique insights into algorithm design and optimization strategies.

Quick Sort (1960): Tony Hoare's In-Place Champion

The Pivot Strategy

Quick Sort is like organizing a library by choosing a "pivot" book and arranging everything relative to it:

#include <stdlib.h>

#include <stdio.h>

// Assumes non-negative integers for simplicity

static inline void swap(int* a, int* b) {

int t = *a;

*a = *b;

*b = t;

}

// Median-of-three helper: orders (low, mid, high) and places median at high

void medianToHigh(int arr[], int low, int high) {

int mid = low + (high - low) / 2;

// Sort low, mid, high

if (arr[low] > arr[mid]) swap(&arr[low], &arr[mid]);

if (arr[low] > arr[high]) swap(&arr[low], &arr[high]);

if (arr[mid] > arr[high]) swap(&arr[mid], &arr[high]);

}

// Lomuto partition with improved duplicate handling

int partition(int arr[], int low, int high) {

// Use median-of-three to place good pivot at high

medianToHigh(arr, low, high);

int pivot = arr[high];

// Index of smaller element

int i = (low - 1);

for (int j = low; j <= high - 1; j++) {

// Changed from <= to < for better duplicate handling

if (arr[j] < pivot) {

i++; // Increment index of smaller element

swap(&arr[i], &arr[j]);

}

}

swap(&arr[i + 1], &arr[high]);

return (i + 1);

}

// Tail recursion elimination: always recurse into smaller partition first

void quickSort(int arr[], int low, int high) {

while (low < high) {

int pi = partition(arr, low, high);

// Always recurse into smaller partition first

if (pi - low < high - pi) {

quickSort(arr, low, pi - 1);

low = pi + 1; // Tail call optimization

} else {

quickSort(arr, pi + 1, high);

high = pi - 1; // Tail call optimization

}

}

}

// Test function

int main() {

int arr[] = {64, 34, 25, 12, 22, 11, 90};

int n = sizeof(arr) / sizeof(arr[0]);

quickSort(arr, 0, n - 1);

printf("Sorted array: ");

for (int i = 0; i < n; i++)

printf("%d ", arr[i]);

printf("\n");

return 0;

}

Alternative (Hoare partition):

// Hoare partition - more efficient but complex

int hoarePartition(int arr[], int low, int high) {

medianToHigh(arr, low, high);

int pivot = arr[high];

int i = low - 1;

int j = high + 1;

while (1) {

do { i++; } while (arr[i] < pivot);

do { j--; } while (arr[j] > pivot);

if (i >= j) return j;

swap(&arr[i], &arr[j]);

}

}

void quickSortHoare(int arr[], int low, int high) {

if (low < high) {

int p = hoarePartition(arr, low, high);

quickSortHoare(arr, low, p); // Note: includes pivot

quickSortHoare(arr, p + 1, high);

}

}

3-Way Partition (Dutch National Flag) for duplicate-heavy data:

// Three-way partition for handling many duplicates

void quickSort3(int arr[], int low, int high) {

if (low < high) {

medianToHigh(arr, low, high);

int pivot = arr[high];

int lt = low; // Elements < pivot

int gt = high; // Elements > pivot

int i = low; // Current element

while (i <= gt) {

if (arr[i] < pivot) {

swap(&arr[lt++], &arr[i++]);

} else if (arr[i] > pivot) {

swap(&arr[i], &arr[gt--]);

} else {

i++; // Equal to pivot

}

}

quickSort3(arr, low, lt - 1);

quickSort3(arr, gt + 1, high);

}

}

Visual Walkthrough:

Original: [64, 34, 25, 12, 22, 11, 90]

Pivot: 90 (after median-of-three)

Partition 1: [11, 34, 25, 12, 22, 64, 90] // 11 < 90, 64 < 90

Partition 2: [11, 12, 25, 34, 22, 64, 90] // 12 < 90, 34 < 90

Partition 3: [11, 12, 22, 34, 25, 64, 90] // 22 < 90, 25 < 90

Final: [11, 12, 22, 25, 34, 64, 90] // All elements sorted

Pivot Selection Strategies

1. Last Element (Simple but Risky):

int pivot = arr[high]; // Can lead to O(n^2) on sorted arrays

2. Random Pivot (Better):

int randomPivot = low + rand() % (high - low + 1);

swap(&arr[randomPivot], &arr[high]);

int pivot = arr[high];

3. Median-of-Three (Best for Practice):

// Already implemented in medianToHigh() function above

Complexity Analysis

| Metric | Best Case | Average Case | Worst Case |

|---|---|---|---|

| Time | O(n log n) | O(n log n) | O(n^2) |

| Space | O(log n) | O(log n) | O(n) |

Notes: Average stack depth is O(log n) due to tail recursion elimination, but worst-case remains O(n) for pathological inputs.

When to Use:

- Large datasets

- In-place sorting required

- Average case performance critical

- Cache-friendly (good locality)

When to Avoid:

- Guaranteed worst-case performance needed

- Nearly sorted data (without good pivot selection)

- Stable sorting required

Heap Sort (1964): J.W.J. Williams' Priority Queue Approach

The Heap Data Structure

Heap Sort uses a binary heap - a complete binary tree where each parent is larger than its children (max heap):

#include <stdlib.h>

// Assumes non-negative integers

static inline void swap(int* a, int* b) {

int t = *a;

*a = *b;

*b = t;

}

void heapify(int arr[], int n, int i) {

int largest = i; // Initialize largest as root

int left = 2 * i + 1; // Left child

int right = 2 * i + 2; // Right child

// If left child is larger than root

if (left < n && arr[left] > arr[largest])

largest = left;

// If right child is larger than largest so far

if (right < n && arr[right] > arr[largest])

largest = right;

// If largest is not root

if (largest != i) {

swap(&arr[i], &arr[largest]);

// Recursively heapify the affected sub-tree

heapify(arr, n, largest);

}

}

void heapSort(int arr[], int n) {

// Build max heap

for (int i = n / 2 - 1; i >= 0; i--)

heapify(arr, n, i);

// Extract elements from heap one by one

for (int i = n - 1; i > 0; i--) {

// Move current root to end

swap(&arr[0], &arr[i]);

// Call max heapify on the reduced heap

heapify(arr, i, 0);

}

}

// Test function

int main() {

int arr[] = {64, 34, 25, 12, 22, 11, 90};

int n = sizeof(arr) / sizeof(arr[0]);

heapSort(arr, n);

printf("Sorted array: ");

for (int i = 0; i < n; i++)

printf("%d ", arr[i]);

printf("\n");

return 0;

}

Visual Walkthrough:

Original Array: [64, 34, 25, 12, 22, 11, 90]

Step 1: Build Max Heap

90

/ \

34 25

/ \ / \

12 22 11 64

Step 2: Extract Max (90) and Heapify

64

/ \

34 25

/ \ /

12 22 11

Step 3: Continue until sorted

Final: [11, 12, 22, 25, 34, 64, 90]

Heap Properties

1. Complete Binary Tree: All levels filled except possibly the last 2. Heap Property: Parent ≥ Children (max heap) or Parent ≤ Children (min heap) 3. Array Representation: Parent at index i, children at 2i+1 and 2i+2

Complexity Analysis

| Metric | Best Case | Average Case | Worst Case |

|---|---|---|---|

| Time | O(n log n) | O(n log n) | O(n log n) |

| Space | O(1) | O(1) | O(1) |

When to Use:

- Guaranteed O(n log n) performance needed

- In-place sorting required

- Priority queue implementation

- Memory-constrained environments

When to Avoid:

- Cache performance critical (poor cache locality compared to quicksort)

- Stable sorting required

- Small datasets (overhead)

Radix Sort (1887 concept, 1960s algorithm): Herman Hollerith's Digit-by-Digit Method

The Non-Comparison Approach

Radix Sort is like sorting library books by ISBN digit by digit, from least significant to most significant:

Note: Standard LSD implementation requires non-negative integers. For negative numbers, either offset all values by the minimum negative value, or sort negatives by absolute value via radix, reverse that block, then merge with non-negatives.

#include <stdlib.h>

#include <string.h>

#include <stdio.h>

// Assumes non-negative integers

int getMax(int arr[], int n) {

int max = arr[0];

for (int i = 1; i < n; i++)

if (arr[i] > max)

max = arr[i];

return max;

}

void countSort(int arr[], int n, int exp) {

int *output = malloc(n * sizeof *output); // Dynamic allocation

int count[10] = {0}; // Count array for digits 0-9

// Store count of occurrences in count[]

for (int i = 0; i < n; i++)

count[(arr[i] / exp) % 10]++;

// Change count[i] so that count[i] now contains actual

// position of this digit in output[]

for (int i = 1; i < 10; i++)

count[i] += count[i - 1];

// Build the output array

for (int i = n - 1; i >= 0; i--) {

output[count[(arr[i] / exp) % 10] - 1] = arr[i];

count[(arr[i] / exp) % 10]--;

}

// Copy the output array to arr[]

for (int i = 0; i < n; i++)

arr[i] = output[i];

free(output); // Free allocated memory

}

void radixSort(int arr[], int n) {

// Find the maximum number to know number of digits

int max = getMax(arr, n);

// Do counting sort for every digit with overflow safety

for (long long exp = 1; max / exp > 0; exp *= 10)

countSort(arr, n, exp);

}

// Test function

int main() {

int arr[] = {170, 45, 75, 90, 802, 24, 2, 66};

int n = sizeof(arr) / sizeof(arr[0]);

radixSort(arr, n);

printf("Sorted array: ");

for (int i = 0; i < n; i++)

printf("%d ", arr[i]);

printf("\n");

return 0;

}

High-Performance Byte Radix Sort (32-bit integers):

// Byte-based radix sort for 32-bit integers (4 passes, radix 256)

void byteRadixSort(int arr[], int n) {

int *output = malloc(n * sizeof *output);

int count[256] = {0};

// Sort by each byte (least significant first)

for (int byte = 0; byte < 4; byte++) {

// Reset count array

memset(count, 0, sizeof(count));

// Count occurrences of each byte value

for (int i = 0; i < n; i++) {

int byte_val = (arr[i] >> (8 * byte)) & 0xFF;

count[byte_val]++;

}

// Calculate positions

for (int i = 1; i < 256; i++)

count[i] += count[i - 1];

// Build output array (stable sort)

for (int i = n - 1; i >= 0; i--) {

int byte_val = (arr[i] >> (8 * byte)) & 0xFF;

output[count[byte_val] - 1] = arr[i];

count[byte_val]--;

}

// Copy back to input array

memcpy(arr, output, n * sizeof(int));

}

free(output);

}

Visual Walkthrough:

Original: [170, 45, 75, 90, 802, 24, 2, 66]

Step 1: Sort by least significant digit (1s place)

170, 90, 802, 2, 24, 45, 75, 66

Step 2: Sort by 10s place

802, 2, 24, 45, 66, 170, 75, 90

Step 3: Sort by 100s place

2, 24, 45, 66, 75, 90, 170, 802

Final: [2, 24, 45, 66, 75, 90, 170, 802]

Radix Sort Variations

1. LSD (Least Significant Digit) Radix Sort:

- Sorts from rightmost digit to leftmost

- Stable sort

- Most common implementation

2. MSD (Most Significant Digit) Radix Sort:

- Sorts from leftmost digit to rightmost

- Can be faster for some data distributions

- More complex implementation

3. String Radix Sort:

void stringRadixSort(char* arr[], int n, int maxLen) {

// Sort strings by each character position

// Note: Variable-length strings must be padded with sentinel (e.g., '\0')

// for fixed-position passes. Stability is required.

for (int pos = maxLen - 1; pos >= 0; pos--)

countSortStrings(arr, n, pos);

}

Complexity Analysis

| Metric | Best Case | Average Case | Worst Case |

|---|---|---|---|

| Time | O(nk) | O(nk) | O(nk) |

| Space | O(n + k) | O(n + k) | O(n + k) |

Where k is the number of digits in the maximum number.

When to Use:

- Fixed-width keys (integers, fixed-length strings)

- Large datasets with small key ranges

- When comparison-based sorts are too slow

- Stable sorting required

When to Avoid:

- Variable-width keys

- Memory-constrained environments

- Small datasets (overhead)

Algorithm Comparison and Selection Guide

Performance Comparison

| Algorithm | Best Case | Average Case | Worst Case | Space | Stable | In-Place |

|---|---|---|---|---|---|---|

| Quick Sort | O(n log n) | O(n log n) | O(n^2) | O(n) | No | Yes |

| Heap Sort | O(n log n) | O(n log n) | O(n log n) | O(1) | No | Yes |

| Radix Sort | O(nk) | O(nk) | O(nk) | O(n + k) | Yes | No |

Note: Quick Sort space complexity is O(n) worst case, but tail recursion elimination reduces average stack depth to O(log n).

Selection Guidelines

Choose Quick Sort when:

- Average performance is critical

- In-place sorting needed

- Cache performance matters

- You can tolerate occasional O(n^2) cases

Choose Heap Sort when:

- Guaranteed O(n log n) performance required

- Memory is extremely limited

- Priority queue implementation needed

Choose Radix Sort when:

- Keys have fixed width

- Keys are integers or fixed-length strings

- Comparison-based sorts are too slow

- Stable sorting required

Real-World Applications

Quick Sort

- Programming language libraries: C's qsort(), C++'s std::sort()

- Database systems: Index creation, query optimization

- Game development: Collision detection, spatial partitioning

Heap Sort

- Operating systems: Process scheduling, memory management

- Network protocols: Priority queues for packet routing

- Embedded systems: Real-time task scheduling

Radix Sort

- Database systems: Sorting large datasets with integer keys

- Network routing: IP address sorting

- File systems: Directory listing, file organization

Advanced Optimizations

Quick Sort Optimizations

1. Dual-Pivot Quick Sort:

#include <stdlib.h>

// Assumes non-negative integers

static inline void swap(int* a, int* b) {

int t = *a;

*a = *b;

*b = t;

}

void dualPivotQuickSort(int arr[], int left, int right) {

if (right - left < 27) {

insertionSort(arr, left, right);

return;

}

// Choose two pivots

if (arr[left] > arr[right]) swap(&arr[left], &arr[right]);

int pivot1 = arr[left];

int pivot2 = arr[right];

// Three-way partitioning

int less = left + 1;

int great = right - 1;

int k = less;

while (k <= great) {

if (arr[k] < pivot1) {

swap(&arr[k], &arr[less++]);

} else if (arr[k] > pivot2) {

while (k < great && arr[great] > pivot2) great--;

swap(&arr[k], &arr[great--]);

if (arr[k] < pivot1) {

swap(&arr[k], &arr[less++]);

}

}

k++;

}

swap(&arr[left], &arr[--less]);

swap(&arr[right], &arr[++great]);

dualPivotQuickSort(arr, left, less - 1);

dualPivotQuickSort(arr, less + 1, great - 1);

dualPivotQuickSort(arr, great + 1, right);

}

2. Intro Sort (Hybrid):

- Combines Quick Sort, Heap Sort, and Insertion Sort

- Switches to Heap Sort if Quick Sort recursion depth exceeds limit

- Uses Insertion Sort for small subarrays

Heap Sort Optimizations

1. Bottom-Up Heap Construction:

void buildHeapBottomUp(int arr[], int n) {

for (int i = n / 2 - 1; i >= 0; i--)

heapify(arr, n, i);

}

2. Heap Sort with Floyd's Optimization:

- Reduces number of comparisons during heap construction

- More efficient for large datasets

Radix Sort Optimizations

1. Counting Sort Optimization:

- Use bit manipulation for power-of-2 radices

- Reduce memory allocation overhead

2. MSD Radix Sort with Early Termination:

- Stop sorting when subarrays are small enough

- Switch to insertion sort for small partitions

Practice Problems and Implementation Challenges

LeetCode Problems (Interview Focus)

- LeetCode 912: Sort an Array

- Difficulty: Medium

- Implement: Quick Sort, Merge Sort, Heap Sort

- Key Learning: Algorithm comparison, stability considerations

- Interview Tip: Know when to use each algorithm

- LeetCode 148: Sort List

- Difficulty: Medium

- Implement: Merge Sort for linked lists

- Key Learning: In-place sorting constraints, pointer manipulation

- Interview Tip: Linked list sorting is common in interviews

- LeetCode 179: Largest Number

- Difficulty: Medium

- Implement: Custom comparator with string sorting

- Key Learning: Custom sorting criteria, string manipulation

- Interview Tip: Custom comparators are frequently tested

- LeetCode 215: Kth Largest Element

- Difficulty: Medium

- Implement: QuickSelect algorithm

- Key Learning: Selection algorithms, partition-based approach

- Interview Tip: Selection problems are very common

- LeetCode 347: Top K Frequent Elements

- Difficulty: Medium

- Implement: Bucket sort with frequency counting

- Key Learning: Frequency-based sorting, bucket sort application

- Interview Tip: Frequency problems often use sorting

Advanced Interview Problems

- LeetCode 315: Count of Smaller Numbers After Self

- Difficulty: Hard

- Implement: Merge sort with inversion counting

- Key Learning: Divide-and-conquer with additional tracking

- Interview Tip: Shows advanced understanding of merge sort

- LeetCode 493: Reverse Pairs

- Difficulty: Hard

- Implement: Merge sort with pair counting

- Key Learning: Modified merge sort for counting problems

- Interview Tip: Demonstrates algorithm modification skills

Implementation Challenges (Interview Practice)

Challenge 1: Hybrid Sort Implementation

// Implement a hybrid sort that automatically chooses the best algorithm

void hybridSort(int arr[], int n) {

if (n < 16) {

insertionSort(arr, n);

} else if (n < 1000) {

quickSort(arr, 0, n - 1);

} else {

heapSort(arr, n);

}

}

Interview Focus: Algorithm selection, understanding trade-offs

Challenge 2: Stable Quick Sort

// Implement a stable version of Quick Sort

void stableQuickSort(int arr[], int n) {

// Hint: Use extra space to maintain stability

// Consider using indices or pairs with original positions

}

Interview Focus: Understanding stability, space-time trade-offs

Challenge 3: In-Place Radix Sort

// Implement an in-place version of Radix Sort

void inPlaceRadixSort(int arr[], int n) {

// Hint: Use cyclic permutations

// Challenge: Maintain O(nk) time complexity

}

Interview Focus: Advanced algorithm modification, optimization

Challenge 4: Custom Comparator Implementation

#include <stdlib.h>

#include <string.h>

// Implement sorting with custom comparator for objects

typedef struct {

int id;

char name[50];

int priority;

} Task;

// Sort by priority (descending), then by name (ascending)

int compareTasks(const void* a, const void* b) {

Task* taskA = (Task*)a;

Task* taskB = (Task*)b;

if (taskA->priority != taskB->priority) {

return taskB->priority - taskA->priority; // Descending

}

return strcmp(taskA->name, taskB->name); // Ascending

}

Interview Focus: Custom sorting criteria, struct handling

Challenge 5: Partial Sort Implementation

// Implement partial sort to find top K elements

void partialSort(int arr[], int n, int k) {

// Hint: Use heap sort or quick select

// Goal: First k elements should be sorted

}

Interview Focus: Partial sorting, selection algorithms

Key Takeaways

- Quick Sort: The practical champion with excellent average-case performance

- Heap Sort: The guaranteed performer with predictable O(n log n) complexity

- Radix Sort: The non-comparison specialist for fixed-width keys

Algorithm Selection Framework

IF keys are fixed-width integers/strings AND n is large:

Use Radix Sort

ELSE IF guaranteed worst-case performance needed:

Use Heap Sort

ELSE IF average performance and cache efficiency matter:

Use Quick Sort

ELSE IF stable sorting required:

Use Merge Sort or Radix Sort

ELSE IF memory is extremely limited:

Use Heap Sort

ELSE:

Use Quick Sort (default choice)

Interview Preparation Checklist

Must-Know Concepts:

- Time/Space Complexity of all sorting algorithms

- Stability of each algorithm

- In-place vs extra space requirements

- Best/Worst/Average case scenarios

- When to use each algorithm

Common Interview Questions:

- "What's the time complexity of Quick Sort?"

- Answer: O(n log n) average, O(n^2) worst case

- Follow-up: "When does worst case occur?"

- "How would you sort a linked list?"

- Answer: Merge Sort (stable, O(n log n), works well with pointers)

- "What's the difference between Quick Sort and Merge Sort?"

- Answer: In-place vs extra space, stability, worst-case performance

- "When would you use Heap Sort?"

- Answer: When guaranteed O(n log n) needed, memory-constrained environments

- "How would you find the Kth largest element?"

- Answer: QuickSelect algorithm (O(n) average case)

Advanced Interview Topics:

- Custom comparators for complex objects

- Partial sorting for top-K problems

- Stable sorting requirements

- External sorting for large datasets

- Hybrid algorithms (Tim Sort, Intro Sort)

Next Steps in Our DSA Journey

In our upcoming sessions, we'll explore:

- Searching Algorithms: Linear Search, Binary Search, Advanced Search Techniques

- Graph Algorithms: DFS, BFS, Shortest Path, Connected Components

- Dynamic Programming: Memoization, Tabulation, Classic Problems

- Advanced Data Structures: Trees, Heaps, Hash Tables

"The best algorithm is the one you understand and can implement correctly." - Unknown

Reference: This article builds upon the foundational sorting concepts introduced in our Problem-Solving Series. For more advanced topics, continue following our comprehensive DSA learning journey.

Visual Learning Resources:

- Visualgo - Quick Sort: Watch pivot selection and partitioning

- Visualgo - Heap Sort: See heap construction and extraction

- Sorting Algorithm Animations: Compare all algorithms side-by-side

Practice Problems:

- LeetCode 912: Sort an Array

- LeetCode 148: Sort List

- LeetCode 179: Largest Number

- LeetCode 215: Kth Largest Element

- LeetCode 347: Top K Frequent Elements

Remember: Master the fundamentals with simple implementations before tackling advanced LeetCode problems. This structured approach builds a stronger foundation.

Ready to master advanced sorting? Practice implementing these algorithms and understand when to use each one!

Interview Success Metrics

By the End of This Sorting Series, You Should Be Able To:

- Implement all major sorting algorithms from memory

- Explain time/space complexity of each algorithm

- Choose the right algorithm for given constraints

- Optimize sorting solutions for specific problems

- Handle edge cases and special requirements

- Discuss trade-offs between different approaches

Interview Readiness Checklist:

- Can implement Quick Sort with good pivot selection

- Can implement Merge Sort for linked lists

- Can implement Heap Sort with heapify operations

- Can implement Radix Sort for integer arrays

- Can write custom comparators for complex objects

- Can solve sorting-related LeetCode problems in 20-30 minutes

- Can explain algorithm choices and trade-offs clearly

"Sorting is not just about putting things in order; it's about understanding the structure of data and the efficiency of algorithms." - Adapted from Donald Knuth

Comprehensive Sorting Algorithm Landscape

Popular Sorting Algorithms Across Programming Languages

| Algorithm | C/C++ | Java | Python | JavaScript | Go | Rust | Applications |

|---|---|---|---|---|---|---|---|

| Quick Sort | qsort() | Arrays.sort() (primitives) | list.sort() (Timsort) | Array.sort() (Timsort) | sort.Ints() | slice.sort() | General-purpose, libraries |

| Merge Sort | Manual | Arrays.sort() (objects) | sorted() (Timsort) | Array.sort() (Timsort) | sort.Slice() | slice.sort_by() | Stable sorting, external sort |

| Heap Sort | Manual | PriorityQueue | heapq | Manual | container/heap | BinaryHeap | Priority queues, guaranteed O(n log n) |

| Insertion Sort | Manual | Manual | Manual | Manual | Manual | Manual | Small arrays, nearly sorted data |

| Bubble Sort | Manual | Manual | Manual | Manual | Manual | Manual | Educational, simple implementations |

| Selection Sort | Manual | Manual | Manual | Manual | Manual | Manual | Educational, in-place sorting |

| Shell Sort | Manual | Manual | Manual | Manual | Manual | Manual | Medium-sized arrays, gap sequences |

| Radix Sort | Manual | Manual | Manual | Manual | Manual | Manual | Fixed-width keys, integers |

| Bucket Sort | Manual | Manual | Manual | Manual | Manual | Manual | Uniformly distributed data |

| Counting Sort | Manual | Manual | Manual | Manual | Manual | Manual | Small range integers |

| Tim Sort | Manual | Arrays.sort() (objects) | list.sort() | Array.sort() | Manual | Manual | Production systems, hybrid |

| Intro Sort | std::sort() | Manual | Manual | Manual | Manual | Manual | C++ standard library |

Language-Specific Implementations

C/C++ Standard Library

#include <algorithm>

#include <vector>

std::vector<int> arr = {64, 34, 25, 12, 22, 11, 90};

// Quick Sort (Introsort: quicksort + depth-limit fallback to heapsort + insertion sort for small ranges)

std::sort(arr.begin(), arr.end());

// Stable sort (Merge Sort)

std::stable_sort(arr.begin(), arr.end());

// Partial sort (Heap Sort)

std::partial_sort(arr.begin(), arr.begin() + 3, arr.end());

Java Collections Framework

import java.util.*;

int[] arr = {64, 34, 25, 12, 22, 11, 90};

// Dual-Pivot Quick Sort for primitives

Arrays.sort(arr);

// Tim Sort for objects

List<Integer> list = Arrays.asList(64, 34, 25, 12, 22, 11, 90);

Collections.sort(list);

// Priority Queue (Heap)

PriorityQueue<Integer> pq = new PriorityQueue<>();

Python Built-in Sorting

arr = [64, 34, 25, 12, 22, 11, 90]

# Tim Sort (default)

arr.sort() # In-place

sorted_arr = sorted(arr) # New list

# Custom sorting

arr.sort(key=lambda x: x % 10) # Sort by last digit

JavaScript Array Methods

let arr = [64, 34, 25, 12, 22, 11, 90];

// Tim Sort (V8 engine)

arr.sort((a, b) => a - b);

// Custom sorting

arr.sort((a, b) => a.toString().localeCompare(b.toString()));

Note: Go's sort uses a quicksort hybrid with worst-case safeguards.

Specialized Sorting Algorithms

1. Cocktail Sort (Bidirectional Bubble Sort)

#include <stdlib.h>

// Assumes non-negative integers

static inline void swap(int* a, int* b) {

int t = *a;

*a = *b;

*b = t;

}

void cocktailSort(int arr[], int n) {

int swapped = 1;

int start = 0;

int end = n - 1;

while (swapped) {

swapped = 0;

// Forward pass

for (int i = start; i < end; i++) {

if (arr[i] > arr[i + 1]) {

swap(&arr[i], &arr[i + 1]);

swapped = 1;

}

}

if (!swapped) break;

swapped = 0;

end--;

// Backward pass

for (int i = end - 1; i >= start; i--) {

if (arr[i] > arr[i + 1]) {

swap(&arr[i], &arr[i + 1]);

swapped = 1;

}

}

start++;

}

}

Applications: Nearly sorted data, educational purposes

2. Gnome Sort (Stupid Sort)

#include <stdlib.h>

// Assumes non-negative integers

static inline void swap(int* a, int* b) {

int t = *a;

*a = *b;

*b = t;

}

void gnomeSort(int arr[], int n) {

int index = 0;

while (index < n) {

if (index == 0) index++;

if (arr[index] >= arr[index - 1]) index++;

else {

swap(&arr[index], &arr[index - 1]);

index--;

}

}

}

Applications: Simple implementations, embedded systems

3. Comb Sort

#include <stdlib.h>

// Assumes non-negative integers

static inline void swap(int* a, int* b) {

int t = *a;

*a = *b;

*b = t;

}

void combSort(int arr[], int n) {

int gap = n;

int swapped = 1;

while (gap != 1 || swapped == 1) {

gap = (gap * 10) / 13;

if (gap < 1) gap = 1;

swapped = 0;

for (int i = 0; i < n - gap; i++) {

if (arr[i] > arr[i + gap]) {

swap(&arr[i], &arr[i + gap]);

swapped = 1;

}

}

}

}

Applications: Bubble sort improvement, gap sequences

4. Cycle Sort

#include <stdlib.h>

// Assumes non-negative integers

static inline void swap(int* a, int* b) {

int t = *a;

*a = *b;

*b = t;

}

void cycleSort(int arr[], int n) {

for (int cycle_start = 0; cycle_start < n - 1; cycle_start++) {

int item = arr[cycle_start];

int pos = cycle_start;

for (int i = cycle_start + 1; i < n; i++)

if (arr[i] < item) pos++;

if (pos == cycle_start) continue;

while (item == arr[pos]) pos++;

int temp = item;

item = arr[pos];

arr[pos] = temp;

while (pos != cycle_start) {

pos = cycle_start;

for (int i = cycle_start + 1; i < n; i++)

if (arr[i] < item) pos++;

while (item == arr[pos]) pos++;

temp = item;

item = arr[pos];

arr[pos] = temp;

}

}

}

Applications: Minimizing memory writes, write-once memory

Industry Applications by Domain

Database Systems

- B-Tree Indexes: Balanced tree structures for efficient range queries

- External Sorting: Merge sort variants for disk-based operations

- Query Optimization: Cost-based sorting for join operations

Operating Systems

- Process Scheduling: Priority queues (heap sort)

- Memory Management: Buddy system allocation

- File Systems: Directory listing, file organization

Web Applications

- Search Results: Relevance-based sorting

- E-commerce: Price, rating, popularity sorting

- Social Media: Feed algorithms, content ranking

Game Development

- Rendering: Z-buffer depth sorting

- Collision Detection: Spatial partitioning

- AI: Pathfinding, decision trees

Scientific Computing

- Data Analysis: Statistical sorting

- Machine Learning: Feature ranking

- Simulation: Event scheduling

Next in the Series: The Art of Searching

"The best search algorithm is the one that finds what you're looking for in the least amount of time."

Introduction to Searching Algorithms

Searching is the complement to sorting - once data is organized, we need efficient ways to find specific elements. Our next exploration will cover:

1. Linear Search: The Foundation

- Concept: Sequential examination of elements

- Complexity: O(n) time, O(1) space

- Applications: Unsorted data, small datasets

- Optimizations: Sentinel values, early termination

2. Binary Search: The Divide-and-Conquer Champion

- Concept: Halving the search space in each iteration

- Complexity: O(log n) time, O(1) space (iterative)

- Prerequisites: Sorted data

- Variations: Lower bound, upper bound, range queries

3. Interpolation Search: The Intelligent Guess

- Concept: Using value distribution to estimate position

- Complexity: O(log log n) average, O(n) worst case

- Applications: Uniformly distributed data

- Trade-offs: Better average case, worse worst case

4. Exponential Search: The Unbounded Approach

- Concept: Finding range before binary search

- Complexity: O(log n) time

- Applications: Unbounded arrays, streaming data

- Advantages: Works without knowing array size

5. Jump Search: The Block Strategy

- Concept: Jumping fixed steps, then linear search

- Complexity: O(√n) time

- Applications: Sorted arrays, block-based storage

- Optimization: Optimal jump size calculation

Advanced Searching Techniques

1. Hash-Based Searching

- Hash Tables: O(1) average case lookup

- Collision Resolution: Chaining, open addressing

- Applications: Databases, caches, symbol tables

2. Tree-Based Searching

- Binary Search Trees: O(log n) average case

- AVL Trees: Self-balancing guarantees

- Red-Black Trees: Efficient insertions/deletions

- B-Trees: Disk-based searching

3. String Searching

- Naive String Matching: O(mn) complexity

- KMP Algorithm: O(m+n) preprocessing and search

- Boyer-Moore: Right-to-left scanning

- Rabin-Karp: Hash-based string matching

Real-World Search Applications

Web Search Engines

- Inverted Indexes: Word-to-document mapping

- PageRank: Link-based relevance scoring

- Query Processing: Boolean operations, phrase matching

Database Systems

- Index Structures: B-trees, hash indexes

- Query Optimization: Cost-based plan selection

- Full-Text Search: Text indexing and retrieval

Operating Systems

- File Systems: Directory traversal, file lookup

- Memory Management: Page tables, virtual memory

- Process Management: Process scheduling, resource allocation

Artificial Intelligence

- Pathfinding: A*, Dijkstra's algorithm

- Game Trees: Minimax, alpha-beta pruning

- Pattern Recognition: Template matching, feature detection

Search Algorithm Selection Framework

IF data is unsorted AND dataset is small:

Use Linear Search

ELSE IF data is sorted AND random access available:

Use Binary Search

ELSE IF data is uniformly distributed:

Use Interpolation Search

ELSE IF array size is unknown:

Use Exponential Search

ELSE IF data is in blocks:

Use Jump Search

ELSE IF O(1) lookup needed:

Use Hash Table

ELSE IF range queries needed:

Use Tree-based search

ELSE:

Use Binary Search (default choice)

Upcoming Search Algorithm Deep Dives

- Binary Search Variations

- Lower bound, upper bound implementations

- Rotated array searching

- Peak finding in mountain arrays

- String Matching Algorithms

- KMP algorithm with failure function

- Boyer-Moore with bad character rule

- Regular expression matching

- Tree-Based Searching

- Binary search tree operations

- Self-balancing tree implementations

- B-tree for external storage

- Advanced Search Techniques

- Bloom filters for approximate membership

- Locality-sensitive hashing

- Geometric searching (kd-trees)

"Searching is not just about finding; it's about finding efficiently." - Adapted from Donald Knuth

Stay tuned for our comprehensive exploration of searching algorithms in the next installment of the DSA Series!